4.6 Vector Addition Example with Timing¶

In this example we will look at these new aspects of CUDA coding.

Using code timing to know how fast the code runs.

Experiment to find out whether changes to the code affect the time and to compare running on the host CPU to running on the GPU device.

Add a check for whether a user enters a value for threads per block that is larger than allowed.

Demonstrate that you can run kernel code without using dim3 variables if you are only concerned with the x dimension and using 1D grids and thread blocks.

Show a standard practice of using an additional command line argument for the size of our array.

Show that we can run several device kernel functions, one after the other, from one main program.

The code contains experimental timings for the following conditions:

Running on a single thread on the host CPU.

Running on a single thread on the GPU device (not something we would normally do, but given to show the difference between CPU and GPU cores).

Running on a single block of threads (grid size 1).

Running on a somewhat small number of blocks, using a slightly different version of the loop to perform the addition.

Running on a large number of blocks, as shown in the previous example.

These cases are numbered in comments in the code shown below.

Note

Taking timings and running experiments as shown here is central to the work process during PDC computing. Just as we need to make sure our code is still correct, we also want to determine the best way to run the code to get the best performance and what factors do not really affect the performance.

Using a single block of threads¶

In prior examples, we used one way of mapping the threads to compute each element of the array.

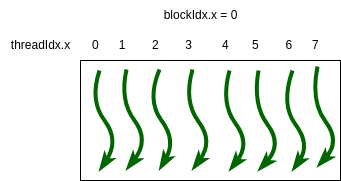

In this section we will explore different mappings of thread blocks in a 1D grid to a 1D array that represents vectors to be added together. We’ll start by envisioning the simplest case from earlier examples, a single block of 8 threads as shown in Figure 4-8.

Figure 4-8. A simple 1D grid with one block of 8 threads.¶

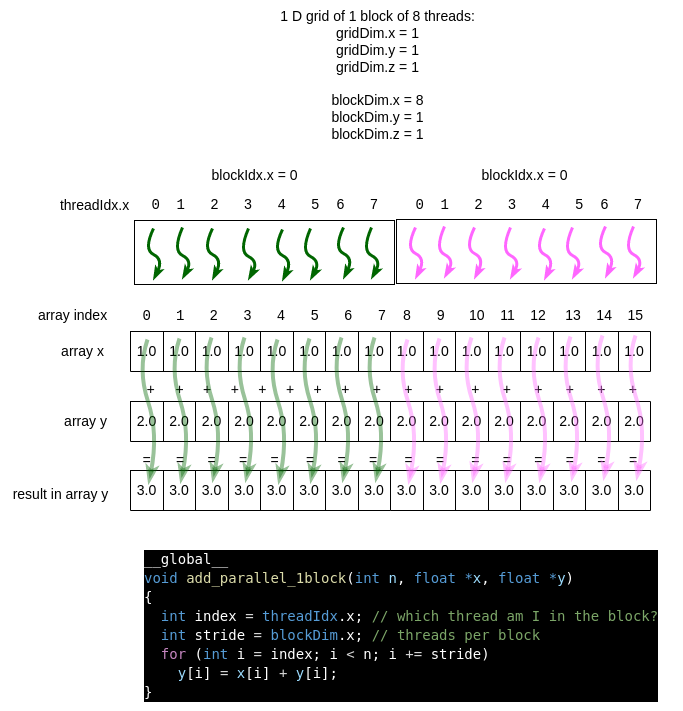

Suppose that we are adding an array of 16 elements to another array of 16 elements using the algorithm for vector addition. There are different ways of setting up a grid of block(s) to complete this task. The first is shown in Figure 4-9, where a single block of 8 threads can be mapped over time to complete 8 computations simultaneously. An example kernel function to do this is shown.

In this case, the block of green threads first works on the first 8 elements of the array. Then in a next larger timestep, the block of threads shown in a magenta color would complete the work.

Figure 4-9 Using one block means less parallelism¶

Pay particular attention to the for loop and how index and stride are set up. Compare the code to the diagram, where the single block is repeated over the top of the array elements it will work on. For 32 elements, imagine 4 larger time steps in this loop, with 8 threads in the block ‘sliding along’ to work on the next 8 elements.

Note

It’s important to understand that the CUDA runtime system takes care of assigning the block of threads to ‘slide along’ your array elements. As a programmer, you are setting up the loop for this and specifying one block. This limits the overall parallelism, mainly because one block runs on one SM on the device, as shown in Figure 4-10.

Using multiple blocks of threads¶

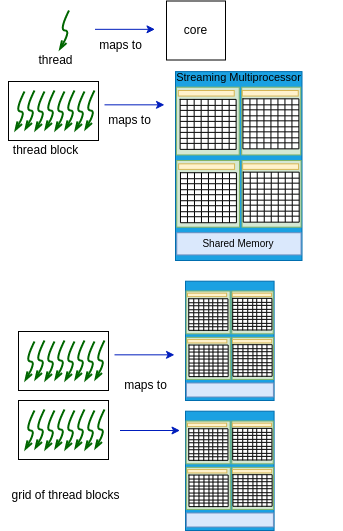

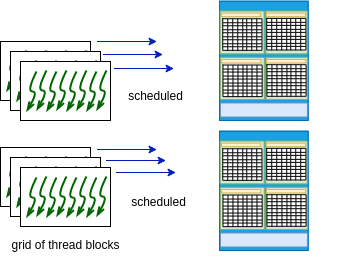

Using multiple blocks of threads in a 1D grid is the most effective way to use an NVIDIA GPU card, since each block will map to a different streaming multiprocessor. Recall this figure from section 4-2:

Figure 4-10 CUDA programming model¶

Fixed grid size method¶

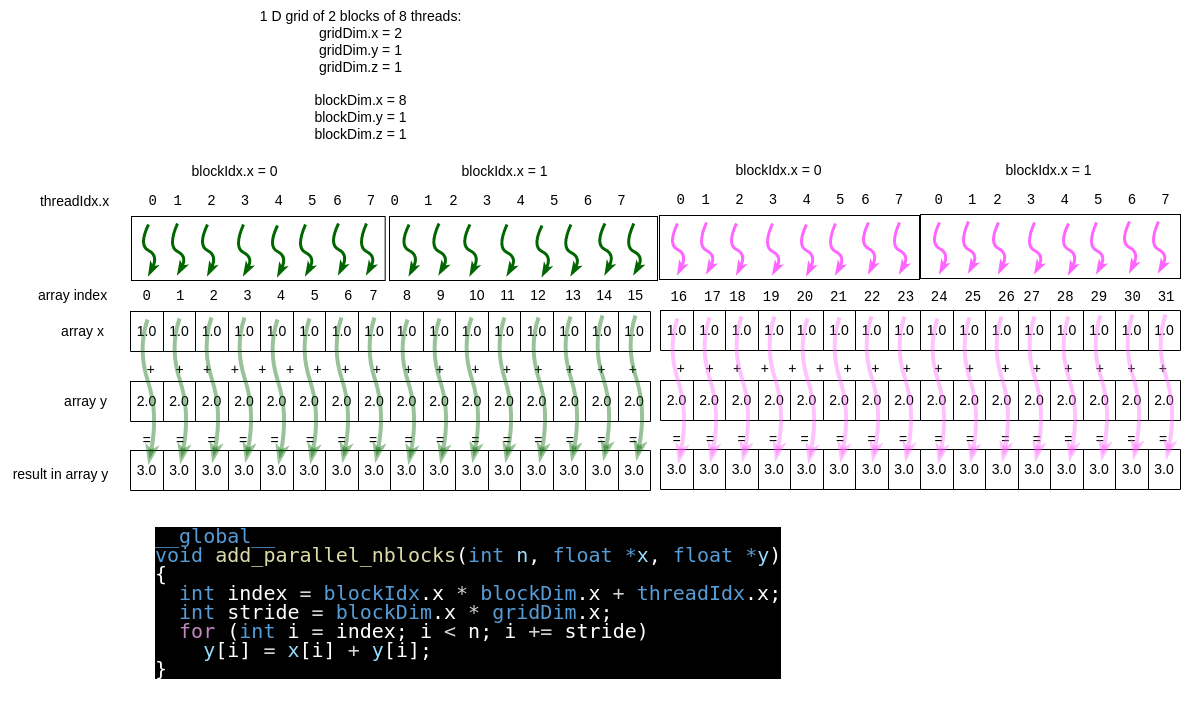

There are different ways of using multiple blocks of threads in our kernel function code. One way is to set a fixed number of blocks in the grid. Let’s illustrate this with an example. Suppose that we use 2 blocks of 8 threads each when we call a kernel function. Further suppose that we have 32 elements in our arrays. This situation is shown in Figure 4-11, including a kernel function that handles this case. Note how with 2 blocks of 8 threads we can perform 16 computations in parallel, then perform 16 more.

Figure 4-11 Fixed number of blocks for the grid size¶

Note that if we increase our array size, for example by doubling it to 64, yet keep the same grid size and block size, the picture above would need four colors to depict the computations that can happen in parallel (in theory- see the next note).

Note

One could argue that the above depiction is not strictly true. The CUDA block scheduler on many devices has gotten really good at making the ‘stride’ version of the code with a fixed grid size run as fast or faster than the following example. You will likely observe this when you run it. It may not be true for other applications, however, so you always need to check and test like we are doing here.

The reason for this is that every core in an SM can actually run multiple threads simultaneously. So the hardware scheduler can assign blocks to run simultaneously on an SM, apparently as efficiently as if the blocks were spread across all SMs, at least for this example and for higher-end GPU cards. So our picture in Figure 4-Y is too simple for modern NVIDIA GPU devices when it comes to scheduling the running threads. The running of the threads is more like Figure 4-12.

Figure 4-12. CUDA schedules blocks to run simultaneously¶

A technical post from NVIDIA states this:

One SM can run several concurrent CUDA blocks depending on the resources needed by CUDA blocks.

We provide this example because you will likely see code examples written like this as you scour the web for CUDA examples. And you may find that some applications perform just a bit better using it. The next method, however, follows what you have seen already: calculate the number of blocks in the grid based on a block size and the number of elements in the array. In theory, this enables you to scale your problem and to use as many streaming multiprocessors on your device as possible.

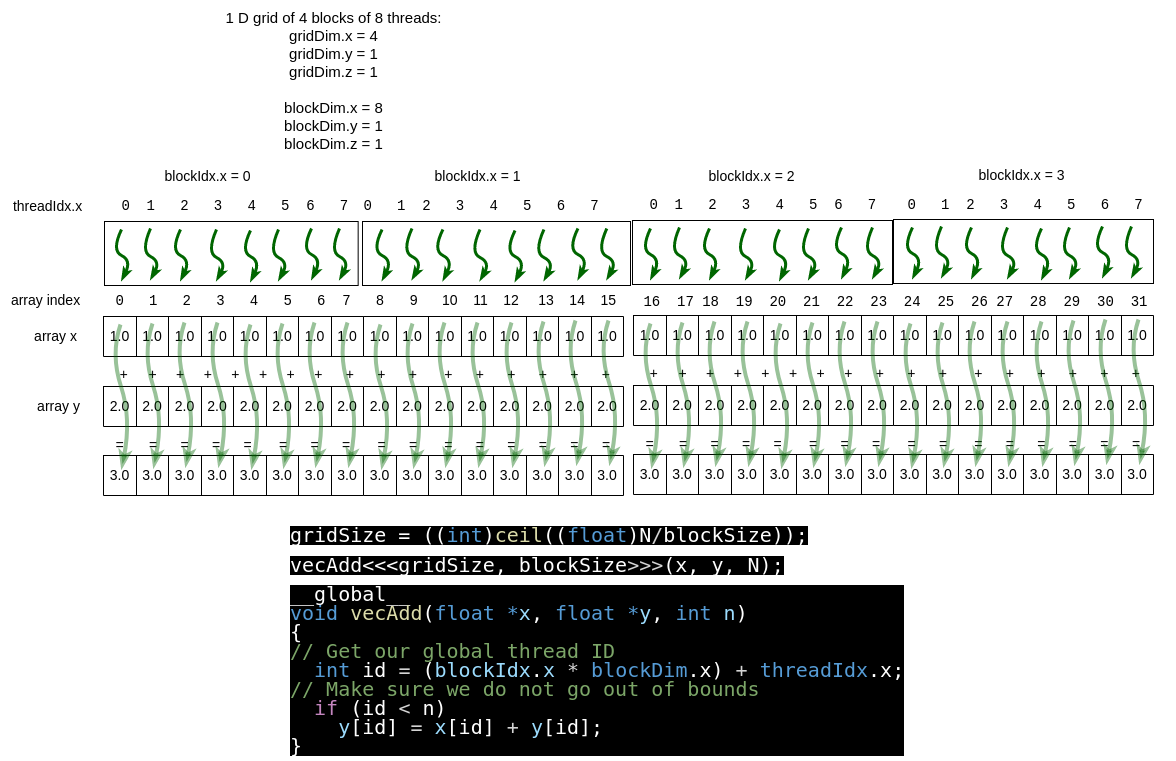

Variable grid size method¶

As the arrays become larger or smaller for a given problem you are working on, or you choose a different number of threads per block (a useful experiment to try for any card you are using), it is preferable to use the array size and the block size to compute the needed 1D grid size.

Though the execution time of this and the previous method may be similar, this method is a useful way to think about CUDA programs: create all the threads you need and map every thread to a particular index in the array.

For example, in the case in Figure 4-11, we looked at doubling the size of the array, but keeping the same number of blocks of threads. Now let’s suppose that we compute a new grid size (blocks per 1D grid) based on the array size and number of threads per block. In this case, we would have the situation given in Figure 4-13. From this, note that we have only one color for the threads because all of the calculations can be done in parallel.

So as the problem size (length of the array in this case) grows, we should be able to take full advantage of the architecture.

Figure 4-13 Number of blocks based on array size and block size¶

Note

Using this method, as array sizes get very large, the grid size needed will exceed the number of SMs on your device. However, as we mentioned before, the system will take care of re-assigning cores on an SM to a new portion of the computation for you.

The complete code¶

File: 4-UMVectorAdd-timing/vectorAdd.cu

//

// Demonstration using a single 1D grid and

// 0, 1, or more 1D blocks of optional size

//

/*

* Example of vector addition :

* Array of floats x is added to array of floats y and the

* result is placed back in y

*

* Timng added for analysis of block size differences.

*/

#include <math.h>

#include <iostream> // alternative cout print for illustration

#include <time.h>

#include <cuda.h>

void initialize(float *x, float *y, int N);

void verifyCorrect(float *y, int N);

void getArguments(int argc, char **argv, int *blockSize, int *numElements);

///////

// error checking macro taken from Oakridge Nat'l lab training code:

// https://github.com/olcf/cuda-training-series

////////

#define cudaCheckErrors(msg) \

do { \

cudaError_t __err = cudaGetLastError(); \

if (__err != cudaSuccess) { \

fprintf(stderr, "Fatal error: %s (%s at %s:%d)\n", \

msg, cudaGetErrorString(__err), \

__FILE__, __LINE__); \

fprintf(stderr, "*** FAILED - ABORTING\n"); \

exit(1); \

} \

} while (0)

// To run code on host for comparison

void HostAdd(int n, float *x, float *y)

{

for (int i = 0; i < n; i++)

y[i] = x[i] + y[i];

}

// Kernel function to add the elements of two arrays

// This one is sequential on one GPU core.

__global__

void add(int n, float *x, float *y)

{

for (int i = 0; i < n; i++)

y[i] = x[i] + y[i];

}

// Parallel version that uses threads in the block.

//

// If block size is 8, e.g.

// thread 0 works on index 0, 8, 16, 24, etc. of each array

// thread 1 works on index 1, 9, 17, 25, etc.

// thread 2 works on index 2, 10, 18, 26, etc.

//

// This is mapping a 1D block of threads onto these 1D arrays.

__global__

void add_parallel_1block(int n, float *x, float *y)

{

int index = threadIdx.x; // which thread am I in the block?

int stride = blockDim.x; // threads per block

for (int i = index; i < n; i += stride)

y[i] = x[i] + y[i];

}

// In this version, thread number is its block number

// in the grid (blockIdx.x) times

// the threads per block plus which thread it is in that block.

//

// Then the 'stride' to the next element in the array goes forward

// by multiplying threads per block (blockDim.x) times

// the number of blocks in the grid (gridDim.x).

__global__

void add_parallel_nblocks(int n, float *x, float *y)

{

int index = blockIdx.x * blockDim.x + threadIdx.x;

int stride = blockDim.x * gridDim.x;

for (int i = index; i < n; i += stride)

y[i] = x[i] + y[i];

}

// Kernel function based on 1D grid of 1D blocks of threads

// In this version, thread number is:

// its block number in the grid (blockIdx.x) times

// the threads per block plus which thread it is in that block.

//

// This thread id is then the index into the 1D array of floats.

// This represents the simplest type of mapping:

// Each thread takes care of one element of the result

//

// For this to work, the number of blocks specified

// times the specified threads per block must

// be the same or greater than the size of the array.

__global__

void vecAdd(float *x, float *y, int n)

{

// Get our global thread ID

int id = (blockIdx.x * blockDim.x) + threadIdx.x;

// Make sure we do not go out of bounds

if (id < n)

y[id] = x[id] + y[id];

}

/// temp test

int main(int argc, char **argv)

{

printf("This program lets us experiment with the number of blocks and the \n");

printf("number of threads per block to see its effect on running time.\n");

printf("\nUsage:\n");

printf("%s [num threads per block] [array_size]\n\n", argv[0]);

printf("\nwhere you can specify only the number of threads per block \n");

printf("and the number of blocks will be calculated based on the size\n");

printf("of the array.\n\n");

// Set up size of arrays

// multiple of 1024 to match largest threads per block

// allowed in many NVIDIA GPUs

//

int N = 32*1048576;

int blockSize = 256; // threads per block

float *x, *y;

// determine largest number of threads per block allowed

int devId; // the number assigned to the GPU

int maxThreadsPerBlock; // maximum threads available per block

cudaGetDevice(&devId);

cudaDeviceGetAttribute(&maxThreadsPerBlock,

cudaDevAttrMaxThreadsPerBlock, devId);

// get optional arguments

getArguments(argc, argv, &blockSize, &N);

//change if requested block size is too large

if (blockSize > maxThreadsPerBlock) {

blockSize = maxThreadsPerBlock;

printf("WARNING: using %d threads per block, which is the maximum.", blockSize);

}

printf("size (N) of 1D array is: %d\n\n", N);

// Size, in bytes, of each vector; just use below

size_t bytes = N*sizeof(float);

// Allocate Unified Memory – accessible from CPU or GPU

cudaMallocManaged(&x, bytes);

cudaMallocManaged(&y, bytes);

cudaCheckErrors("allocate managed memory");

// initialize x and y arrays on the host

initialize(x, y, N);

clock_t t_start, t_end; // for timing

double tot_time_secs;

double tot_time_milliseconds;

///////////////////////////////////////////////////////////////////

// case 1: run on the host on one core

t_start = clock();

// sequentially on the host

HostAdd(N, x, y);

t_end = clock();

tot_time_secs = ((double)(t_end-t_start)) / CLOCKS_PER_SEC;

tot_time_milliseconds = tot_time_secs*1000;

printf("\nSequential time on host: %f seconds (%f milliseconds)\n", tot_time_secs, tot_time_milliseconds);

verifyCorrect(y, N);

///////////////////////////////////////////////////////////////////

// case 2:

// Purely illustration of something you do not ordinarilly do:

// Run kernel on all elements on the GPU sequentially on one thread

// re-initialize

initialize(x, y, N);

t_start = clock();

add<<<1, 1>>>(N, x, y); // the kernel call

cudaCheckErrors("add kernel call");

// Wait for GPU to finish before accessing on host

cudaDeviceSynchronize();

cudaCheckErrors("Failure to synchronize device");

t_end = clock();

tot_time_secs = ((double)(t_end-t_start)) / CLOCKS_PER_SEC;

tot_time_milliseconds = tot_time_secs*1000;

printf("\nSequential time on one device thread: %f seconds (%f milliseconds)\n", tot_time_secs, tot_time_milliseconds);

verifyCorrect(y, N);

///////////////////////////////////////////////////////////////////

// case 3: using a single block of 256 threads

// re-initialize x and y arrays on the host

initialize(x, y, N);

// Use the GPU in parallel with one block of threads.

// Essentially using one SM for the block.

t_start = clock();

add_parallel_1block<<<1, 256>>>(N, x, y); // the kernel call

cudaCheckErrors("add_parallel_1block kernel call");

// Wait for GPU to finish before accessing on host

cudaDeviceSynchronize();

cudaCheckErrors("Failure to synchronize device");

t_end = clock();

tot_time_secs = ((double)(t_end-t_start)) / CLOCKS_PER_SEC;

tot_time_milliseconds = tot_time_secs*1000;

printf("\nParallel time on 1 block of 256 threads: %f milliseconds\n", tot_time_milliseconds);

verifyCorrect(y, N);

///////////////////////////////////////////////////////////////////

// Case 4:

// Now use multiple blocks so that we use more than one SM.

// Also use a slightly different 'stride' pattern for which

// thread works on which element.

// re-initialize x and y arrays on the host

initialize(x, y, N);

// Number of thread blocks in grid could be fixed

// and smaller than maximum needed.

int gridSize = 16;

printf("\n----------- number of %d-thread blocks: %d\n", blockSize, gridSize);

t_start = clock();

// the kernel call assuming a fixed grid size and using a stride

add_parallel_nblocks<<<gridSize, blockSize>>>(N, x, y);

cudaCheckErrors("add_parallel_nblocks kernel call");

// Wait for GPU to finish before accessing on host

cudaDeviceSynchronize();

cudaCheckErrors("Failure to synchronize device");

t_end = clock();

tot_time_secs = ((double)(t_end-t_start)) / CLOCKS_PER_SEC;

tot_time_milliseconds = tot_time_secs*1000;

printf("Stride loop pattern: \n");

printf("Parallel time on %d blocks of %d threads = %f milliseconds\n", gridSize, blockSize, tot_time_milliseconds);

verifyCorrect(y, N);

//////////////////////////////////////////////////////////////////

// case 5: withot using stride

//

// re-initialize x and y arrays on the host

initialize(x, y, N);

// set grid size based on array size and block size

gridSize = ((int)ceil((float)N/blockSize));

printf("\n----------- number of %d-thread blocks: %d\n", blockSize, gridSize);

t_start = clock();

// the kernel call

vecAdd<<<gridSize, blockSize>>>(x, y, N);

cudaCheckErrors("vecAdd kernel call");

// Wait for GPU to finish before accessing on host

cudaDeviceSynchronize();

cudaCheckErrors("Failure to synchronize device");

t_end = clock();

tot_time_secs = ((double)(t_end-t_start)) / CLOCKS_PER_SEC;

tot_time_milliseconds = tot_time_secs*1000;

printf("No stride loop pattern: \n");

printf("Parallel time on %d blocks of %d threads = %f milliseconds\n", gridSize, blockSize, tot_time_milliseconds);

verifyCorrect(y, N);

///////////////////////////// end of tests

// Free memory

cudaFree(x);

cudaFree(y);

cudaCheckErrors("free cuda memory");

return 0;

}

// To reset the arrays for each trial

void initialize(float *x, float *y, int N) {

// initialize x and y arrays on the host

for (int i = 0; i < N; i++) {

x[i] = 1.0f;

y[i] = 2.0f;

}

}

// check whether the kernel functions worked as expected

void verifyCorrect(float *y, int N) {

// Check for errors (all values should be 3.0f)

float maxError = 0.0f;

for (int i = 0; i < N; i++)

maxError = fmax(maxError, fabs(y[i]- 3.0f));

std::cout << "Max error: " << maxError << std::endl;

}

// simple argument gather for this simple 1D example program

//

// Design is the arguments will be optional in this order:

// number of threads per block

// number of data elements in 1D vector arrays

void getArguments(int argc, char **argv, int *blockSize, int *numElements) {

if (argc == 3) { // both given

*numElements = atoi(argv[2]);

*blockSize = atoi(argv[1]);

} else if (argc == 2) { // just threads per block given

*blockSize = atoi(argv[1]);

}

}

Look through this code carefully and answer this question.

- It is valid and will run using a 1D grid of 128 blocks of 100 threads each.

- Look at the case of one block of 256 threads.

- It is valid and will run using a 1D grid of 1000 blocks of 128 threads each.

- Yes! The grid size as an integer can be used and is the first argument.

- It is invalid.

- Note how two cases above do not use dim3 arguments.

4.6-1: Which is true about the following kernel function call?

vecAdd<<<1000, 128>>>(x, y, N);

Build and run¶

Just as for previous examples, you can use the make command on your own machine or compile the code like this:

nvcc -arch=native -o vectorAdd vectorAdd.cu

Remember that you will need to use a different -arch flag if native does not work for you. (See note at end of section 4.1.)

You can execute this code like this:

./vectorAdd

Some typical output looks like this:

This program lets us experiment with the number of blocks and the

number of threads per block to see its effect on running time.

Usage:

./vectorAdd [num threads per block] [array_size]

where you can specify only the number of threads per block

and the number of blocks will be calculated based on the size

of the array.

size (N) of 1D array is: 33554432

Sequential time on host: 0.090067 seconds (90.067000 milliseconds)

Max error: 0

Sequential time on one device thread: 3.359815 seconds (3359.815000 milliseconds)

Max error: 0

Parallel time on 1 block of 256 threads: 95.871000 milliseconds

Max error: 0

----------- number of 256-thread blocks: 16

Stride loop pattern:

Parallel time on 16 blocks of 256 threads = 66.873000 milliseconds

Max error: 0

----------- number of 256-thread blocks: 131072

No stride loop pattern:

Parallel time on 131072 blocks of 256 threads = 64.726000 milliseconds

Max error: 0

Let’s consider what you see from this by answering the following questions.

- GPU cores are faster than CPU cores.

- Look carefully at the case of one device thread.

- CPU cores are faster than GPU cores.

- Yes! The single GPU thread case ran much slower than the single CPU thread case.

- The speed of CPU and GPU cores are comparable.

- Look carefully at the case of one device thread.

4.6-2: What can we infer about the speed of the CPU and GPU cores?

- True.

- Look carefully at the case of one block.

- False.

- Yes! The single block case ran much slower than when using multiple blocks of threads.

4.6-3: Using one block of threads is a reasonable choice for this example.

For this next question, your results may vary from others, so it’s useful to record your observations.

- 4.6-4: Try running multiple times

Now try running this example under the default conditions several times (try 5 times). What do you observe about the times for the last two cases where multiple blocks are used? (Note that differences of a few milliseconds are small.)

You can also experiment with trying a smaller or larger block size, by running like this:

./vectorAdd 128

./vectorAdd 512

Note

An important point about the design of the NVIDIA cards is that the block size should be a multiple of 32 and that for today’s cards, experiments seem to show that block sizes of 128, 256, or 512 are preferred choices for the design of the hardware.

For further experimentation you could try an array size of 67108864, which is double the default, using different block sizes. The array size is a second argument, like this:

./vectorAdd 128 67108864

This case brings up an interesting observation that you can make for this particular example code. Figure it out by answering this question:

- True.

- Yes! In this case, the simple algorithm is O(N), so this makes sense for the sequential version on the CPU or one GPU core. What's also useful is that it is still using thousands of cores, which could cause overhead, but not enough to hurt us in this case.

- False.

- Try running each case with the same block size a few more times to determine if it really is true.

4.6-5: When we double the size of our problem, the code takes roughly twice the time to run for each case.

Summary¶

This example shows that a host CPU is faster than a single core on a GPU by quite some margin. So to us GPUs effectively, you need to use as many cores as possible in parallel to complete the computation. From this example, you can see that this is possible when the mapping of cores to data elements is straightforward and the computation on each data element is simple (though this example still works well with more sophisticated mathematical calculations involving single elements of each array).

In Araujo(2023), the authors performed an extensive study to determine the affect of the block size on a variety of different benchmark code examples. They concluded that under some circumstances the block size had very small effects on the runtime, but for other cases, keeping it small or large made a considerable difference on how fast the codes ran. Here you likely will see minor effects for vector addition, but in other cases you may not, so it is best to design your code so that you can change it and run experiments. It still holds from their results that block sizes of 128, 256, or 512 are preferred choices.

In this same study, the authors ran their experiments 10 times for the same conditions, getting an average time. This is also a practice you will want to get into the habit of when testing out your code. You should have seen variation in your timing results as you ran the same condition multiple times.

Exercises for Further Exploration¶

Given this example of how the code can be timed on the host, go back and add timing to the code that did not use unified memory. The results will enable you to determine whether our assertion that using unified memory is the preferred method is true for this example.

Another exercise is to consider when the 5th method, using one thread id per array index and calculate the number of blocks, could fail. Though likely a rare case, it is worth thinking about. To do it, go back to the information about your device and determine the maximum number of blocks allowed in a grid.

For case 4, experiment with changing the fixed number of blocks. Is there any case where the time is consistently better or worse than the case where we calculate the number of blocks in the grid based on N and the block size?

There is an example provided in our GitHub repository where CUDA library functions are used for timing the code instead of C timing functions on the host. If you want to explore this example, you can see how CUDA has also provided mechanisms for timing code that can sometimes be useful for adding timing to sophisticated kernel or device functions.

References¶

Araujo, G., Griebler, D., Rockenbach, D. A., Danelutto, M., & Fernandes, L. G. (2023). NAS Parallel Benchmarks with CUDA and beyond. Software: Practice and Experience, 53(1), 53-80.